Deepfake abuse of underage Australians doubles, sparking national warning from eSafety chief

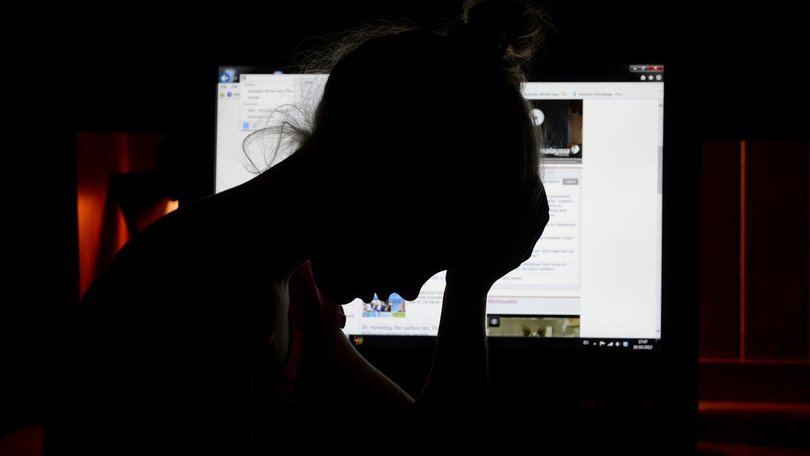

Reports of deepfake abuse targeting underage Australians are rising fast, with warnings that the true scale is far worse than the figures show.

Sharing of explicit deepfake images of underage Australians has doubled in the past 18 months, prompting warnings from government and education leaders.

Figures released on Friday show complaints to the federal eSafety Commissioner’s image-based abuse reporting line have surged, with four out of five cases involving female victims.

Commissioner Julie Inman Grant believes the rapid rise in reports involving young people may reveal only part of the problem, warning that the numbers do not represent "the whole picture."

"Anecdotally, we have heard from school leaders and education sector representatives that deepfake incidents are occurring more frequently, particularly as children are easily able to access and misuse nudity apps in school settings," she said.

Deepfakes are digitally altered images of a person's face or body, and young women and girls are often targeted with sexualised images.

Artificial intelligence has made these tools far more accessible to perpetrators.

“With just one photo, these apps can nudify the image with the power of AI in seconds,” Ms Inman Grant warns.

“Alarmingly, we have seen these apps used to humiliate, bully and sexually extort children in the school yard and beyond. There have also been reports that some of these images have been traded among school children in exchange for money.”

It’s a deeply concerning trend, Asher Flynn, Associate Professor of Criminology at Monash University, says.

She said the situation is complex and stressed that responsibility for addressing the issue lies not only with leaders, students, teachers and parents, but also with major tech companies.

“(We need) to hold tech companies and digital platforms more accountable,” Dr Flynn told AAP.

“We can do this by not allowing advertisement of freely accessible apps that you can use to de-clothe people or to nudify them.”

She acknowledged that some progress is being made, but emphasised the need for clearer and stricter regulations around what can be promoted and accessed online.

Educating parents and children to identify and understand the complexity of deepfakes is also vital, Dr Flynn says.

“These technologies are available and we can’t ignore them,” Dr Flynn says.

“It’s really important to also have that round table conversation, so everyone knows this is what can happen and what the consequences of doing that are for someone.”

Laws cracking down on the sharing of sexually explicit AI-generated images and deepfakes without consent were recently introduced to federal parliament.

Meanwhile, multiple reports have emerged of deepfake images being circulated in schools across the country, including an incident where explicit deepfake images of 50 Melbourne schoolgirls were created and shared online last year.

1800RESPECT: 1800 737 732

National Sexual Abuse and Redress Support Service: 1800 211 028