WASHINGTON POST: Is Artificial Intelligence rewiring our minds? Effects of ChatGPT, AI on cognitive ability

THE WASHINGTON POST: Scientists are probing the cognitive cost of AI chatbots.

In our daily lives, the use of artificial intelligence programs such as ChatGPT is obvious. Students employ them to churn out term papers. Office workers ask them to organise calendars and help write reports. Parents prompt them to create personalised bedtime stories for toddlers.

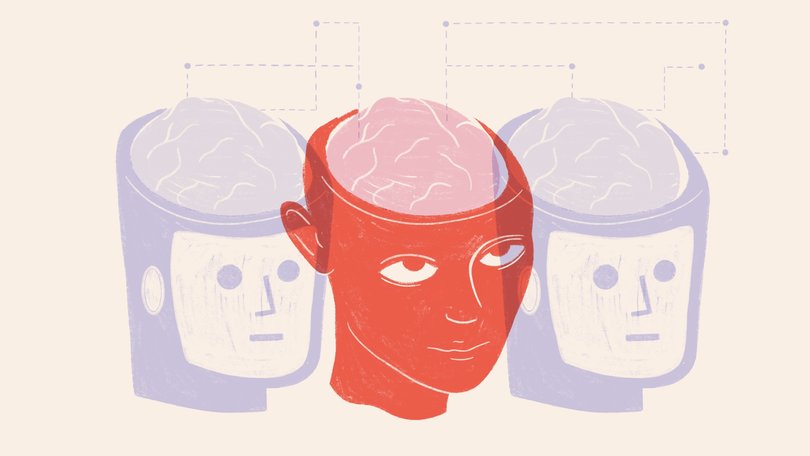

What the persistent use of AI is doing inside our brains, however, remains unclear.

As our reliance on having vast knowledge rapidly synthesized at our fingertips increases, scientists are racing to understand how the frequent use of large language model programs, or LLMs, is affecting our brains — probing worries that they weaken cognitive abilities and stifle the diversity of our ideas.

Headlines proclaiming that AI is making us stupid and lazy went viral this month after the release of a study from the MIT Media Lab. Though researchers caution that this study and others across the field have not drawn hard conclusions on whether AI is reshaping our brains in pernicious ways, the MIT work and other small studies published this year offer unsettling suggestions.

One UK survey study of more than 600 people, published in January, found a “significant negative correlation between the frequent use of AI tools and critical thinking abilities”, with younger users in particular often relying on the programs as substitutes, not supplements, for routine tasks.

The University of Pennsylvania’s Wharton School published a study last week which showed that high school students in Turkey with access to a ChatGPT-style tutor performed significantly better solving practice math problems. But when the program was taken away, they performed worse than students who had used no AI tutor.

In the MIT study that garnered massive attention, and some backlash, researchers measured the brain activity of mostly college students as they used ChatGPT to write SAT-style essays over three sessions.

Researchers outfitted 54 essay writers with caps covered in electrodes that monitor electrical signals in the brain. The ChatGPT users’ work was compared with that of participants who used Google or nothing at all.

The EEG data revealed writers who used ChatGPT exhibited the lowest brain engagement and “consistently underperformed at neural, linguistic, and behavioural levels”, according to the study.

Ultimately, they delivered essays that sounded alike and lacked personal flourishes. English teachers who read the papers called them “soulless”.

The “brain-only” group showed the greatest neural activations and connections between regions of the brain that “correlated with stronger memory, greater semantic accuracy, and firmer ownership of written work”. In a fourth session, members of the ChatGPT group were asked to rewrite one of their previous essays without the tool, but they remembered little of their earlier work.

Sceptics point to myriad limitations. They argue that weaker neural connectivity, as measured by EEG, doesn’t necessarily indicate poor cognition or brain health. For the study participants, the stakes were also low: entrance to college, for example, didn’t depend on completing the essays. Also, only 18 participants returned for the fourth and final session.

Lead MIT researcher Nataliya Kosmyna acknowledges that the study was limited in scope and, contrary to viral internet headlines about the paper, was not gauging whether ChatGPT is making us dumb.

The paper has not been peer-reviewed, but her team released preliminary findings to spark conversation about the impact of ChatGPT, particularly on developing brains, and about the risks of the Silicon Valley ethos of rolling out powerful technology quickly. “Maybe we should not apply this culture blindly in the spaces where the brain is fragile,” Ms Kosmyna said in an interview.

OpenAI, the California company that released ChatGPT in 2022, did not respond to requests for comment. (The Washington Post has a content partnership with OpenAI.)

Michael Gerlich, who spearheaded the UK survey study, called the MIT approach “brilliant” and said it showed that AI is supercharging what is known as “cognitive off-loading”, where we use a physical action to reduce demands on our brain. But instead of off-loading simple data — like phone numbers we once memorised but now store in our phones — people relying on LLMs off-load the critical thinking process. His study suggested younger people and those with less education are quicker to off-load critical thinking to LLMs because they are less confident in their skills. (“It’s become a part of how I think,” one student later told researchers.)

“It’s a large language model. You think it’s smarter than you. And you adopt that,” said Professor Gerlich from SBS Swiss Business School in Zurich.

Still, Ms Kosmyna, Professor Gerlich and other researchers warn against drawing sweeping conclusions: no long-term studies of the nascent technology’s effects on cognition have been completed.

Researchers also stress that the benefits of AI may ultimately outweigh the risks, freeing our minds for bigger and bolder thinking.

Deep-rooted fears

Fear of technology rewiring our brains is nothing new. Socrates warned that writing would make humans forgetful. In the mid-1970s, teachers fretted that cheap calculators might strip students of their ability to do simple math. More recently, the rise of search engines spurred fears of “digital amnesia”.

“It wasn’t that long ago that we were all panicking that Google is making us stupid and now that Google is more part of our everyday lives, it doesn’t feel so scary,” said Sam J. Gilbert, professor of cognitive neuroscience at University College London. “ChatGPT is the new target for some of the concerns. We need to be very careful and balanced in the way that we interpret these findings” of the MIT study.

The MIT paper suggests that ChatGPT essay writers illustrate “cognitive debt”, a condition in which relying on such programs replaces the effortful cognitive processes needed for independent thinking. The resulting essays become biased and superficial. In the long run, the paper suggests, such cognitive debt might make us easier to manipulate and could stifle creativity.

But Professor Gilbert argues that the MIT study of essay writers could also be viewed as an example of what he calls “cognitive spillover”, or discarding some information to clear mental bandwidth for potentially more ambitious thoughts. “Just because people paid less mental effort to writing the essays that the experimenters asked them to do, that’s not necessarily a bad thing,” he said. “Maybe they had more useful, more valuable things they could do with their minds.”

Experts suggest that AI, in the long run and deployed right, may prove to augment, not replace, critical thinking.

The Wharton School study of nearly 1,000 Turkish high school students also included a group that had access to a ChatGPT-style tutor program with built-in safeguards that provided teacher-designed hints instead of giving away answers. Those students performed extremely well with the tutor and, when asked to solve problems unassisted, did roughly as well as students who had not used AI, the study showed.

More research is needed into the best ways to shape user behaviour and design LLM programs that avoid damaging critical thinking skills, said Professor Aniket Kittur from Carnegie Mellon University’s Human-Computer Interaction Institute. He is part of a team creating AI programs designed to light creative sparks rather than churn out finished but bland outputs.

One program, dubbed BioSpark, aims to help users solve problems by drawing inspiration from the natural world, for example when designing a better bike rack to mount on cars. Instead of a bland text interface, the program might display images and details of different animal species to serve as inspiration, such as the shape of frog legs or the stickiness of snail mucus, which could suggest a gel to keep bicycles secure.

Users can cycle through relevant scientific research, save ideas à la Pinterest and then ask the AI program more detailed questions.

“We need both new ways of interacting with these tools that unlocks this kind of creativity,” Professor Kittur said. “And then we need rigorous ways of measuring how successful those tools are. That’s something that you can only do with research.”

Research into how AI programs can augment human creativity is expanding dramatically but receives less attention because of the public’s technology-wary zeitgeist, said Sarah Rose Siskind, a New York-based science and comedy writer who consults with AI companies.

She believes the public needs better education on how to use and think about AI — she created a video on how she uses AI to expand her joke repertoire and reach newer audiences. She also has a forthcoming research paper exploring ChatGPT’s usefulness in comedy.

“I can use AI to understand my audience with more empathy and expertise than ever before,” Ms Siskind said. “So there are all these new frontiers of creativity. That really should be emphasised.”

© 2025, The Washington Post.

Originally published as Is AI rewiring our minds? Scientists probe cognitive cost of chatbots