KATE EMERY: The worrying ways AI is teaching your kids about race

KATE EMERY: When kids are old enough to stop listening to their parents (and not yet old enough to start listening again), it’s their friends who’ll feed them social cues about right and wrong, or AI.

Every parent knows how important a kid’s choice of friends can be.

It’s why we steer our progeny towards the sweet and the studious and away from the kid starting fires at the back of the classroom.

When kids are old enough to stop listening to their parents (and not yet old enough to start listening again), it’s their friends who’ll feed them social cues about right and wrong.

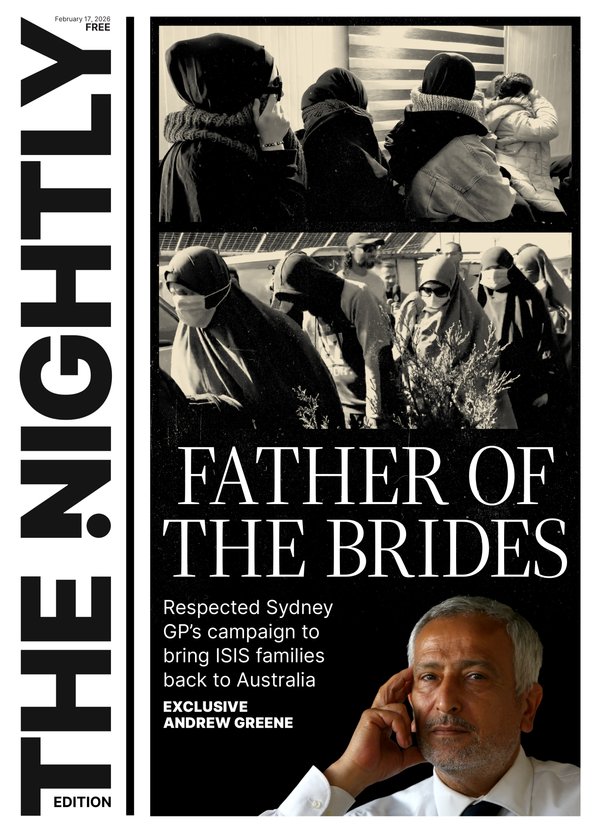

So what do you do when your kid’s friend seems kind of racist and sexist, and goes around spewing ill-informed rubbish? What if their idea of what society should look like is stuck in some cracked-out Norman Rockwell version of the 1950s that never existed?

Worse, what if the friend basically lived in your kid’s bedroom and went to school with them every day?

Oh, and what if the friend isn’t a person so much as a generative artificial intelligence model?

That part’s important. Sorry, I should have mentioned that first.

This is (kind of) what’s happening with the new breed of AI tools that are squirming their way into every piece of computer software and social media platform you can imagine, as big tech companies scramble to justify the trillions of dollars they’re spending on this stuff.

When even MS Paint (MS Paint!) now includes an AI option, you know this thing is more ubiquitous than ants at a picnic.

The dangers of AI chatbots, the best known of which is ChatGPT, have been highlighted by tragic cases overseas in which people took their own lives after forming emotional attachments to chatbots that, in at least one case, seemed to encourage suicide.

Less attention has been paid to what AI programs like Midjourney, DALL-E, Firefly and DreamStudio (the ones that can create images from a simple text prompt) might be showing our kids, particularly the young ones.

It’s… not great.

Tama Leaver, Professor of Internet Studies at Curtin University, knows exactly what kind of offensive, stereotypical and sometimes just plain hallucinatory images these AI programs can produce. Some of his recent research involves feeding various prompts into different AI programs and seeing what images they produce.

The results tend to be racist, sexist and decidedly old-fashioned.

That’s not because the programs themselves are racist but because, as Professor Leaver kindly explained to this columnist, they’ve been trained on the internet, which essentially contains the entire history of racism and sexism and some other pretty questionable stuff.

Ask AI for an image of an “innocent child”, for example, and the chances are you’ll get a white-skinned, blonde-haired, blue-eyed poppet who could have appeared in a Hitler Youth recruitment poster.

Ask for an image of an “Australian father” and you get a slightly crusty Farmer Wants a Wife reject: a sun-bronzed muscle man in an Akubra, possibly holding a koala (or, in one version I saw, a giant lizard for some reason).

A request for an image of an “Australian family” produces a very white family that looks a bit like an unlikely collaboration between Rockwell and Grapes of Wrath-era John Steinbeck.

But insert the word “Indigenous” into that request and you get a bare-breasted woman, a whole lot of dark-skinned people sitting in red dirt and, in one case, some Native American-style feather headdresses.

Similar stereotypes hold true when you ask for images of an “Australian home” versus an “Indigenous Australian home”.

Guess which one AI thinks looks like a slum?

The big takeaway from this research is not that AI should be banned from schools and children (that horse has not so much bolted as fled the scene, taken a transcontinental flight and is now living abroad in a semi-detached apple orchard) but that we need to be aware of the biases AI is bringing to the table, and to our kids.

Adults might be able to laugh at the fact that their phone’s idea of the world is uber-white, ultra-Western and occasionally has too many fingers, but kids don’t necessarily have the media literacy or the life experience to know better.

These AI products have been developed too quickly to have much in the way of guardrails.

Even the Australian Government, which is working on legislation to make this stuff safer for users, has a focus on “high-risk AI”, a category into which it is unlikely to place the programs that make the pretty pictures.

That means it’s up to the humans in the room to be aware of the biases baked into AI and to stop pretending that technology has ever been neutral.

It’s up to the teacher using AI to generate images for a story that the class is collectively creating to know that just asking the computer for images of “children” or “families” is likely to produce an image slightly whiter than the average Peppy Grove street party.

It’s up to adults to help kids who want to use AI for school or fun understand that the technology they’re turning to for answers has been raised not just on history books and contemporary media but on Reddit threads, 4chan message boards and Facebook memes.