Elon Musk's Grok AI floods X with sexualised photos of women

Ministers across the world have raised concerns over explicit content of minors being generated using Elon Musk's AI tool Grok.

Julie Yukari, a musician based in Rio de Janeiro, posted a photo taken by her fiancé to the social media site X just before midnight on New Year’s Eve, showing her in a red dress snuggling in bed with her black cat, Nori.

The next day, somewhere among the hundreds of likes attached to the picture, she saw notifications that users were asking Grok, X’s built-in artificial intelligence chatbot, to digitally strip her down to a bikini.

The 31-year-old did not think much of it, she told Reuters, figuring there was no way the bot would comply with such requests.

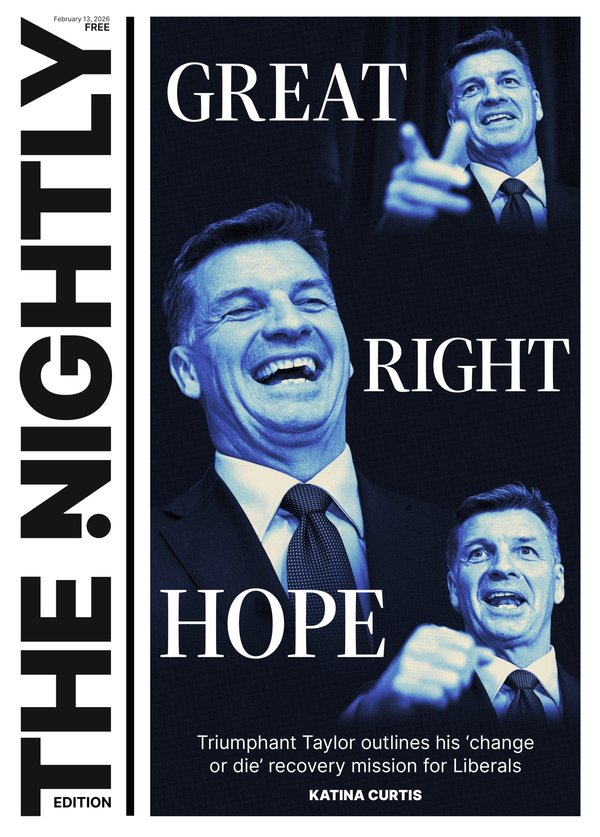

She was wrong. Soon, Grok-generated pictures of her, nearly naked, were circulating across the Elon Musk-owned platform.

Yukari’s experience is being repeated across X, a Reuters analysis found.

Reuters has also identified several cases where Grok created sexualised images of children.

X did not respond to a message seeking comment on Reuters’ findings.

In an earlier statement to the news agency about reports that sexualised images of children were circulating on the platform, X’s owner xAI said: “Legacy Media Lies.”

The flood of nearly nude images of real people has rung alarm bells internationally.

Ministers in France have reported X to prosecutors and regulators over the disturbing images, saying in a statement the “sexual and sexist” content was “manifestly illegal.”

India’s IT ministry said in a letter to X’s local unit that the platform had failed to prevent Grok from being misused to generate and circulate obscene and sexually explicit content.

The US Federal Communications Commission did not respond to requests for comment. The Federal Trade Commission declined to comment.

Musk appeared to poke fun at the controversy earlier on Friday, posting laugh-cry emojis in response to AI edits of famous people - including himself - in bikinis.

When one X user said their social media feed resembled a bar packed with bikini-clad women, Musk replied, in part, with another laugh-cry emoji.

Reuters could not determine the full scale of the surge.

AI-powered programs that digitally undress women - sometimes called “nudifiers” - have been around for years, but until now they were largely confined to the darker corners of the internet, such as niche websites or Telegram channels, and typically required a certain level of effort or payment.

X’s innovation - allowing users to strip women of their clothing by uploading a photo and typing the words, “hey @grok put her in a bikini” - has lowered the barrier to entry.

Three experts who have followed the development of X’s policies around AI-generated explicit content told Reuters that the company had ignored warnings from civil society and child safety groups - including a letter sent last year warning that xAI was only one small step away from unleashing “a torrent of obviously non-consensual deepfakes.”

“In August, we warned that xAI’s image generation was essentially a nudification tool waiting to be weaponised,” said Tyler Johnston, the executive director of The Midas Project, an AI watchdog group that was among the letter’s signatories. “That’s basically what’s played out.”

Dani Pinter, the chief legal officer and director of the Law Center for the National Center on Sexual Exploitation, said X had failed to pull abusive images from its AI training material and should have banned users requesting illegal content.

“This was an entirely predictable and avoidable atrocity,” Pinter said.

Lifeline 13 11 14

Fullstop Australia 1800 385 578

beyondblue 1300 22 4636