Online safety watchdog warns sophisticated AI-generated child sexual abuse material proliferating on open web

Sophisticated AI-generated child sexual abuse material proliferating on the ‘clear web’ is threatening to overwhelm law enforcement as analysts race to determine if the content features real children in danger.

An international online safety watchdog has revealed the general public is increasingly being exposed to “chilling” AI-generated child sexual abuse images and videos on publicly accessible parts of the internet.

The UK-based Internet Watch Foundation said the surge in AI-generated material has the potential to overwhelm and hinder law enforcement agencies around the world from identifying and rescuing real victims of child sexual abuse.

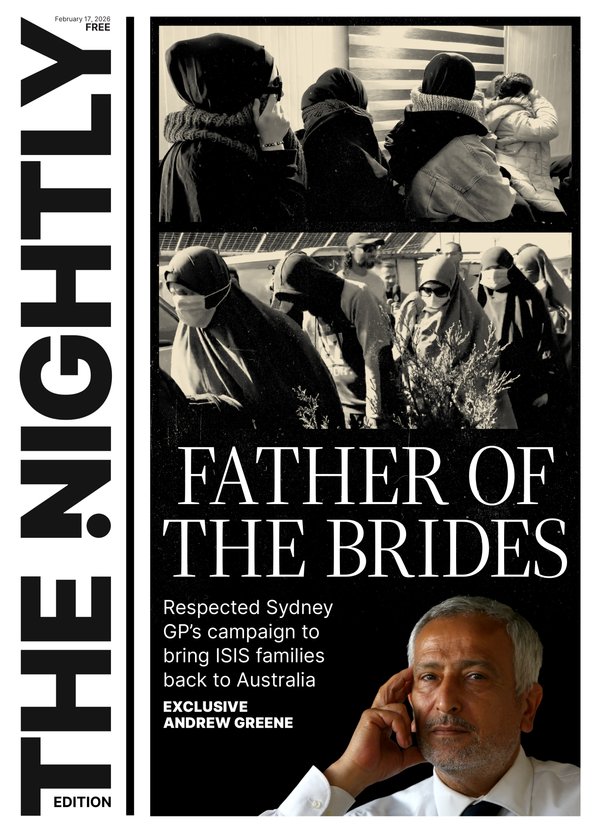

The IWF also warned that the horrific imagery and vision of children being abused has become so realistic that even experts are finding it hard to distinguish which material features real children who are being exploited.

Rapid technological advancements have led to the creation of highly realistic AI-generated child sexual abuse material.

Perpetrators are using certain AI platforms that allow their perverted fantasies to be brought to life by computer-generated children.

Some AI image generators have in-built protections to prevent the production of harmful content but other AI tools, with just a few prompts, can create realistic child sexual abuse material in mere minutes.

The programs can also be used to create ‘deepfake’ sexually explicit images and videos by using a photograph of a real child to create computer-generated material.

In some cases, criminals have used this content to extort children and their families.

The amount of this illegal content reported to the IWF, Europe’s frontline against online child sexual abuse, in the past six months already exceeds the total for the previous year.

And 99 per cent of the abhorrent content reported was found on the ‘clear web’, as opposed to the ‘dark web’, which can only be accessed with certain browsers.

Most reports to the IWF were made by members of the public who stumbled across the illegal material on sites such as forums or AI galleries. The rest were found and actioned by IWF analysts through proactive searching.

The IWF, which has a global remit, traces where child sexual abuse content is hosted so its analysts can act to have it swiftly removed.

“This criminal content is not confined to mysterious places on the dark web,” said a senior IWF analyst who does not want to be named.

“Nearly all of the reports or URLs that we’ve dealt with that contained AI-generated child sexual abuse material were found on the clear web.

“I find it really chilling as it feels like we are at a tipping point and the potential is there for organisations like ourselves and the police to be overwhelmed by hundreds and hundreds of new images, where we don’t always know if there is a real child that needs help.”

Analysts warn that the public is not trained to cope with viewing AI-generated content of children being sexually abused, which they said can be as distressing as seeing real children being exploited.

IWF Interim Chief Executive Officer Derek Ray-Hill said AI-generated child abuse material is a significant and growing threat.

“People can be under no illusion that AI-generated child sexual abuse material causes horrific harm, not only to those who might see it but to those survivors who are repeatedly victimised every time images and videos of their abuse are mercilessly exploited for the twisted enjoyment of predators online,” he said.

“To create the level of sophistication seen in the AI imagery, the software used has also had to be trained on existing sexual abuse images and videos of real child victims shared and distributed on the internet.

“Recent months show that this problem is not going away and is in fact getting worse.”

Recently, a number of Australians have been arrested, charged and jailed for producing and soliciting this criminal content.

In late 2022, the National Center for Missing & Exploited Children in the United States made multiple reports to the Australian Federal Police about an Australian-based user saving and downloading child abuse material from a website and social media platform.

The Tasmanian Joint Anti Child Exploitation Team identified the offender and raided his Gravelly Beach home, in the state’s Tamar Valley region, in May 2023.

An examination of his computer revealed hundreds of files containing child abuse material, including a significant amount generated by AI.

The man was subsequently arrested and charged.

Last year he pleaded guilty to possessing child abuse material obtained using a carriage service and using a carriage service to access child abuse material.

In March the 48-year-old was sentenced in the Launceston Supreme Court to two years in jail.

It was the first conviction in Tasmania relating to child exploitation material generated by AI.

“Child abuse material is still child abuse material, no matter what form it takes,” said AFP Detective Sergeant Aaron Hardcastle.

“People may not be aware that online simulations, fantasy, text-based stories, animations and cartoons, including artificial intelligence-generated content depicting child sexual abuse, are all still considered child abuse material under Commonwealth legislation.

“Whether the image is AI-generated or depicts a real child victim, the material is repulsive and the Tasmania JACET Team, along with the AFP and its law enforcement partners, will continue to identify and locate those sharing this abhorrent content and put them before the courts.”

In July, a Melbourne man was sentenced to 13 months in jail for online child abuse offences, including using an artificial intelligence program to produce child abuse images.

The Victorian JACET found the 48-year-old had used an AI image generation program and inputted text and images to create 793 realistic child abuse images.

Detective Superintendent Tim McKinney, from Victoria Police’s Cybercrime Division, said AI was an evolving issue.

“We are encountering a rise in the reporting of offending where AI is being used in the creation of child abuse material,” he said.

“In some instances, real children are being used to help create these images, objectifying these innocent victims, most of whom are unaware their images are being used in this way.

“AI that depicts child abuse material is illegal and punishable by up to 15 years imprisonment.”

eSafety Commissioner Julie Inman Grant told an AI Leadership Summit in Melbourne this week that generative AI technology was already being exploited by criminal organisations.

“Criminal gangs out of Nigeria and West Africa (are) using face-swapping technology in video-conferencing calls to execute sophisticated sexual extortion schemes targeting young Australian men between the ages of 18 and 24,” she said.

“We’ve seen a four-fold increase in reports since 2018.”

If you have information about people involved in child abuse, you are urged to contact the ACCCE at www.accce.gov.au/report.

If you know abuse is happening right now or a child is at risk, call police immediately on 000.