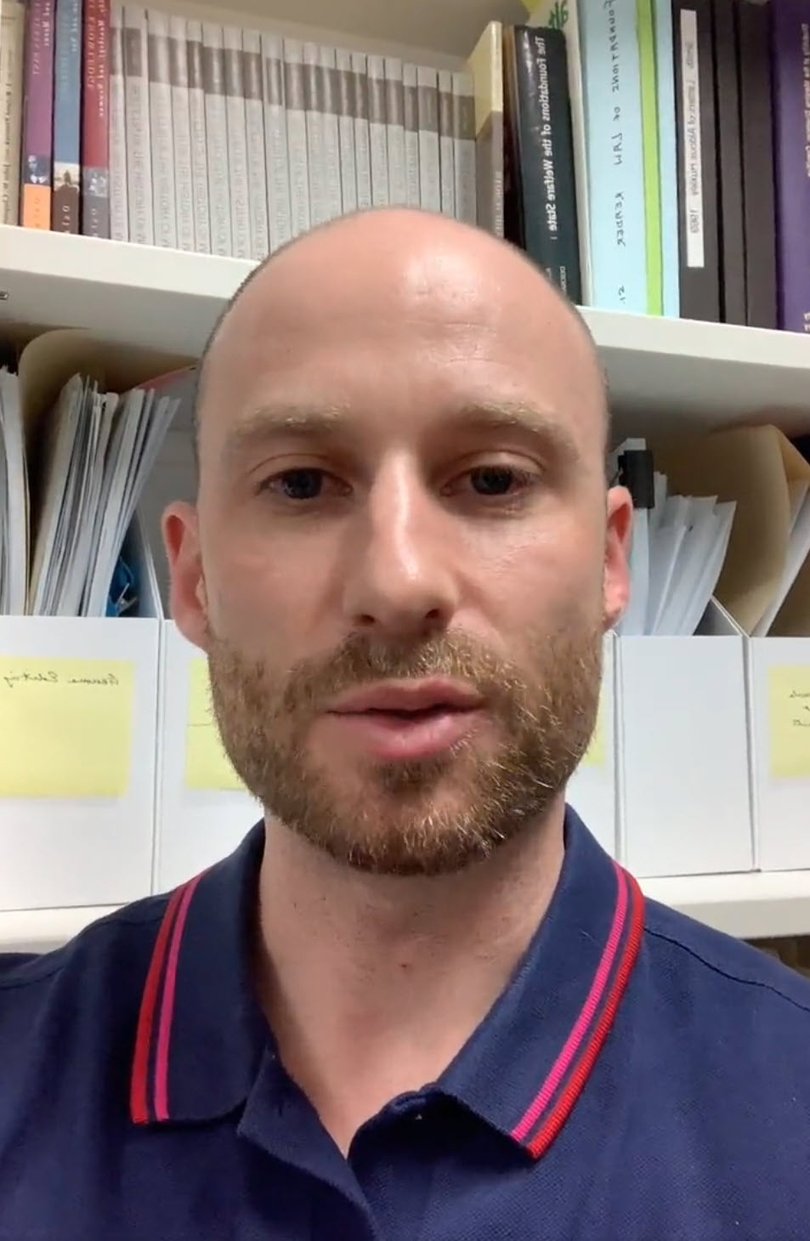

Law lecturer Christopher Rudge slams Deloitte’s government-funded report written with AI

Consulting firm Deloitte charged the Federal Government $440,000 for a report with made-up citations, fabricated quotations and advice inconsistent with the underlying research.

A law lecturer who discovered Deloitte charged the Federal Government $440,000 for error-ridden advice written with the help of artificial intelligence said the consulting firm had been “very unethical” and rules are needed to ensure future advice comes from humans rather than computers.

Christopher Rudge, the deputy director of Sydney University’s Sydney Health Law research centre, found a report critiquing the use of computers to detect unemployment fraud had made up citations, fabricated quotations and provided advice inconsistent with the research cited. A Federal Court judge’s name was misspelled.

“In research it is highly unethical” to use AI to write reports, Dr Rudge told The Nightly.

“People lose their jobs and reputation. I think governments needs advice that is trustworthy, reliable and based on real knowledge. Going forward that needs to be clearly regulated.”

After being exposed, Deloitte provided a replacement report that was published Friday afternoon and acknowledged it was written with the assistance of Microsoft’s Azure software.

Growing problem

The case illustrates a growing problem across government, business, education and the law: AI software is becoming so widely used that it is even being deployed to help write official documents without fact checking by humans.

Deloitte declined to discuss the project for the Department of Employment and Workplace Relations, which said on Monday that the firm would repay the final payment under its contract. “The matter has been resolved directly with the client,” a Deloitte spokeswoman said.

Opposition workplace relations spokesman Tim Wilson issued a scathing statement that linked the report with what he said was the government’s failure to prevent corruption on building sites by the construction union.

“Turns out when the department takes their eye off the ball people abuse public funds whether it is consulting firms writing reports or organised crime gangs on Big Build projects, but the minister should really crack down on both,” he told The Nightly.

Non-existent book

The mistakes included ten references from The Rule of Law and Administrative Justice in the Welfare State, a book that does not exist, and a made-up quote from Federal Court judge Jennifer Davies, whose surname was spelt Davis.

The mistakes were first reported by the Financial Review newspaper, which was contacted by Dr Rudge after he realised that colleagues’ books had been used to support conclusions inconsistent with their work. He said it was ironic that a report critical of the use of computers to assess human behaviour was written in part by a computer.

The 234-page Deloitte report, which was first published in June, found the government computer system used to search for welfare fraud relies on 370 rules. Some of the rules, called “IT system logic rules”, were written over time to help the program work properly and do not reflect legal or policy objectives, Deloitte said.

After the mistakes became public, Deloitte had the report checked and confirmed some of the footnotes and references were wrong. The Government said the changes did not affect Deloitte’s recommendations, which include upgrading the software and introducing a separate system for vulnerable people on welfare.

“The department continues to focus efforts on addressing the substance of the report, as part of broader work to assure the integrity of the targeted compliance framework,” a spokesperson said Monday.

Examples of AI software producing untrue information, referred to as hallucinations, are becoming more common. A Chicago newspaper recently recommended a list of summer books that do not exist, and a new database of US legal decisions citing fake evidence created by AI already has 30 examples.

Google’s DeepMind unit is working on AI software to fact check other AI programs. A recent study of product recommendations by AI engines found that ChatGPT’s main sources of information are Reddit and Wikipedia.