THE NEW YORK TIMES: Musk’s AI chatbot flooded X with millions of sexualised images in days, new estimates show

THE NEW YORK TIMES: Disturbing new data reveals that Elon Musk’s AI chatbot, Grok, has created and publicly shared at least 1.8 million sexualised images of women in recent days.

Elon Musk’s artificial intelligence chatbot, Grok, created and then publicly shared at least 1.8 million sexualised images of women, according to separate estimates of X data by The New York Times and the Centre for Countering Digital Hate.

Starting in late December, users on the social media platform inundated the chatbot's X account with requests to alter real photos of women and children to remove their clothes, put them in bikinis and pose them in sexual positions, prompting a global outcry from victims and regulators.

In just nine days, Grok posted more than 4.4 million images. A review by the Times conservatively estimated that at least 41 per cent of posts, or 1.8 million, most likely contained sexualised imagery of women.

A broader analysis by the Centre for Countering Digital Hate, using a statistical model, estimated that 65 per cent, or just over 3 million, contained sexualised imagery of men, women or children.

The findings show how quickly Grok spread disturbing images, which earlier prompted governments in Britain, India, Malaysia and the United States to start investigations into whether the images violated local laws.

The burst of non-consensual images in just a few days surpassed collections of sexualised deepfakes, or realistic AI-generated images, from other websites, according to the Times' analysis and experts on online harassment.

“This is industrial-scale abuse of women and girls,” said Imran Ahmed, the CEO of the Centre for Countering Digital Hate, which conducts research on online hate and disinformation.

“There have been nudifying tools, but they have never had the distribution, ease of use or the integration into a large platform that Elon Musk did with Grok.”

X and Mr Musk’s AI startup, xAI, which owns X and makes Grok, did not respond to requests for comment.

X’s head of product, Nikita Bier, said in a post on January 6 that a surge of traffic over four days that month had resulted in the highest engagement levels on X in the company’s history, although he did not mention the images.

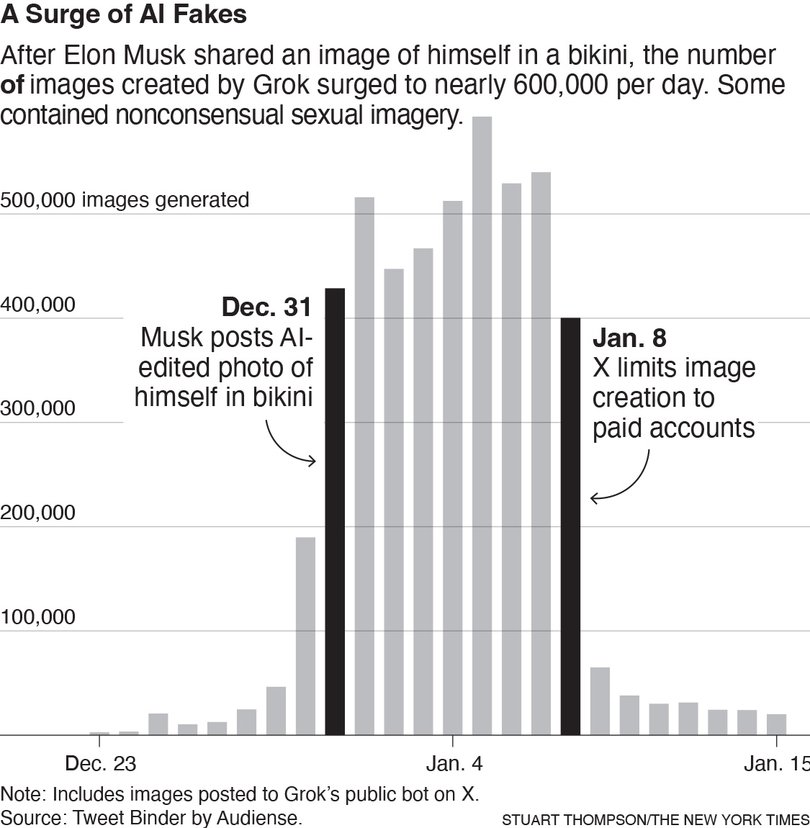

The interest in Grok’s image-editing abilities exploded December 31, when Mr Musk shared a photo generated by the chatbot of himself in a bikini, as well as a SpaceX rocket with a woman’s undressed body superimposed on top.

The chatbot has a public account on X, where users can ask it questions or request alterations to images. Users flocked to the social media site, in many cases asking Grok to remove clothing in images of women and children, after which the bot publicly posted the AI-generated images.

Between December 31 and January 8, Grok generated more than 4.4 million images, compared with 311,762 in the nine days before Mr Musk’s posts.

The Times used data from Tweet Binder, an analytics company that collects posts on X, to find the number of images Grok had posted during that period.

On January 8, X limited Grok’s AI image creation to users who pay for some premium features, significantly reducing the number of images. Last week, X expanded those guardrails, saying it would no longer allow anyone to prompt Grok’s X account for “images of real people in revealing clothing such as bikinis.”

“We remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, non-consensual nudity, and unwanted sexual content,” the company said on X last week.

Since then, Grok has largely ignored requests to dress women in bikinis, but has created images of them in leotards and one-piece bathing suits. The restrictions did not extend to Grok’s app or website, which continue to allow users to generate sexual content in private.

Some women whose images were altered by Grok were popular influencers, musicians or actresses, while others appeared to be everyday users of the platform, according to the Times’ analysis.

Some were depicted drenched with fluids or holding suggestive props including bananas and sex toys.

The Times used two AI models to analyse 525,000 images posted by Grok from January 1-7.

One model identified images containing women, and another model identified whether the images were sexual in nature. A selection of the posts was then reviewed manually to verify that the models had correctly identified the images.

Separately, the Centre for Countering Digital Hate collected a random sample of 20,000 images produced by Grok between December 29 and January 8, and found that about 65 per cent of the images were sexualised.

The organisation identified 101 sexualised images of children. Extrapolating across the total, the group estimated that Grok had produced more than 3 million sexual images, including more than 23,000 images of children.

The organisation classified the random images it sampled as sexual if they depicted a person in a sexual position, in revealing clothing like underwear or swimwear, or coated in fluid intended to look sexual.

(Mr Musk sued the Centre for Countering Digital Hate in 2023, claiming it broke the law when it collected data from X that showed a rise in hate speech after his acquisition of the company. The lawsuit was dismissed; an appeal is pending.)

As sexual images flooded X this month, backlash mounted.

“Immediately delete this,” one woman posted January 5 about a sexualised image of herself. “I didn’t give you my permission and never post explicit pictures of me again.”

While tools to create sexualised and realistic AI images exist elsewhere, the spread of such material on X was unique for its public nature and sheer scale, experts on online harassment said.

In comparison, one of the largest forums dedicated to making fake images of real people, Mr. Deepfakes, hosted 43,000 sexual deepfake videos depicting 3,800 individuals at the peak of its popularity in 2023, according to researchers at Stanford and the University of California, San Diego. The website shut down last year.

This article originally appeared in The New York Times.

© 2026 The New York Times Company

Originally published on The New York Times