The Economist: Why do Nvidia’s chips dominate the AI market?

The firm has three big advantages.

No other firm has benefited from the boom in artificial intelligence (AI) as much as Nvidia.

Since January 2023 the chipmaker’s share price has surged by almost 450 per cent. With the total value of its shares approaching $2trn, Nvidia is now America’s third-most valuable firm, behind Microsoft and Apple.

Its revenues for the most recent quarter were $22bn, up from $6bn in the same period last year.

Most analysts expect that Nvidia, which controls more than 95 per cent of the market for specialist AI chips, will continue to grow at a blistering pace for the foreseeable future. What makes its chips so special?

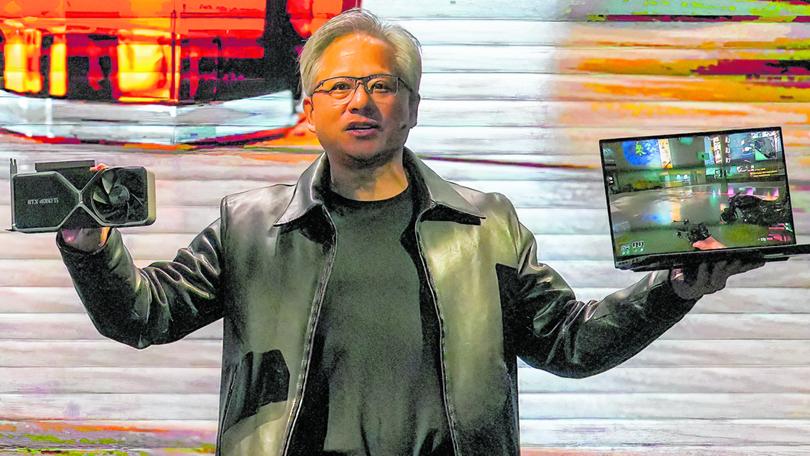

Nvidia’s AI chips, also known as graphics processing units (GPUs) or “accelerators”, were initially designed for video games.

They use parallel processing, breaking each computation into smaller chunks, then distributing them among multiple “cores”—the brains of the processor—in the chip.

This means that a GPU can run calculations far faster than it would if it completed tasks sequentially. This approach is ideal for gaming: lifelike graphics require countless pixels to be rendered simultaneously on the screen. Nvidia’s high-performance chips now account for four-fifths of gaming GPUs.
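To make the idea concrete, here is a minimal Python sketch of the split, distribute and combine pattern described above. It is an illustration rather than how a GPU is actually programmed: the function names are hypothetical, a pool of threads stands in for a GPU's cores, and summing squares stands in for real graphics or AI work. In CPython, threads doing pure-Python arithmetic do not run truly in parallel, so the sketch shows how the work is divided rather than delivering a speed-up.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares_chunk(chunk):
    """Work done by one 'core': square and add up the numbers in its chunk."""
    return sum(x * x for x in chunk)

def split(data, n_chunks):
    """Break the input into at most n_chunks contiguous pieces of roughly equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, never zero
    return [data[i:i + size] for i in range(0, len(data), size)]

def parallel_sum_of_squares(data, n_workers=4):
    """Split the job, hand each piece to a worker, then combine the partial results."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares_chunk, chunks))
    return sum(partials)

if __name__ == "__main__":
    data = list(range(1, 1001))
    print(parallel_sum_of_squares(data))  # → 333833500
```

Because each chunk is processed independently, adding more workers (or, on a GPU, more cores) lets more pieces be handled at once; only the final step of adding up the partial results has to wait for all of them.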

Happily for Nvidia, its chips have found much wider uses: cryptocurrency mining, self-driving cars and, most important, training of AI models.

Modern AI is built on machine-learning algorithms, and in particular on deep learning, a branch of machine learning that uses artificial neural networks. In these networks computers extract rules and patterns from massive datasets.

Training a network involves large-scale computations, but because the tasks can be broken into smaller chunks, parallel processing is an ideal way to speed things up. A high-performance GPU can have thousands of cores, so it can handle thousands of calculations at the same time.
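A minimal sketch, under simplifying assumptions, of why neural-network workloads split so neatly: in one fully connected layer, each artificial neuron computes a weighted sum of the same inputs independently of every other neuron, so all of them can be worked out at the same time. The names, the tiny hand-picked weights and the ReLU activation are illustrative choices, threads again stand in for GPU cores, and only a single forward pass is shown; real training repeats such steps, plus the calculations that adjust the weights, over enormous datasets.

```python
from concurrent.futures import ThreadPoolExecutor

def neuron_output(weights, inputs, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, passed through ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)  # ReLU: negative totals become zero

def dense_layer(weight_rows, inputs, biases, n_workers=4):
    """Compute every neuron in a layer concurrently.

    No neuron needs another neuron's result, only the shared inputs,
    so each one can be handed to a separate worker (a GPU core, in the real thing).
    """
    shared_inputs = [inputs] * len(weight_rows)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(neuron_output, weight_rows, shared_inputs, biases))

if __name__ == "__main__":
    weights = [[1.0, 2.0], [-1.0, 0.5], [0.0, 0.0]]  # three neurons, two inputs each
    biases = [0.0, 1.0, -1.0]
    print(dense_layer(weights, [3.0, 4.0], biases))  # → [11.0, 0.0, 0.0]
```

A real network has millions or billions of such weights, which is why hardware that can evaluate thousands of neurons at once matters so much.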

Once Nvidia realised that its accelerators were highly efficient at training AI models, it focused on optimising them for that market. Its chips have kept pace with ever more complex AI models: in the decade to 2023 Nvidia increased the speed of its computations 1,000-fold.
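Assuming, purely for illustration, that the gain compounded at a steady annual rate $r$ over the ten years, a 1,000-fold increase works out to roughly a doubling every year:

$$
r^{10} = 1000 \quad\Longrightarrow\quad r = 1000^{1/10} = 10^{0.3} \approx 1.995
$$

As a check, $2^{10} = 1024$, close to the 1,000-fold figure.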

But Nvidia’s soaring valuation is not just because of faster chips. Its competitive edge extends to two other areas.

One is networking. As AI models continue to grow, the data centres running them need thousands of GPUs lashed together to boost processing power (most computers use just a handful).

Nvidia connects its GPUs through a high-performance network based on products from Mellanox, a supplier of networking technology that it acquired in 2019 for $7bn. This allows it to optimise the performance of its network of chips in a way that competitors can’t match.

Nvidia’s other strength is Cuda, a software platform that allows customers to fine-tune the performance of its processors. Nvidia has been investing in this software since the mid-2000s, and has long encouraged developers to use it to build and test AI applications. This has made Cuda the de facto industry standard.

Nvidia’s juicy profit margins and the rapid growth of the AI accelerator market — projected to reach $400bn per year by 2027 — have attracted competitors.

Amazon and Alphabet are crafting AI chips for their data centres. Other big chipmakers and startups also want a slice of Nvidia’s business.

In December 2023 Advanced Micro Devices, another chipmaker, unveiled a chip that by some measures is roughly twice as powerful as Nvidia’s most advanced chip.

But even building better hardware may not be enough.

Nvidia dominates AI chipmaking because it offers the best chips, the best networking kit and the best software.

Any competitor hoping to displace the semiconductor behemoth will need to beat it in all three areas. That will be a tall order.