Meta murders: Expert blames social media algorithms for trapping young users in cycle of violent content

For the Instagram generation, the brutal truth is that violent content and horrific footage they’re not ready to cope with is virtually inescapable.

And that’s because the algorithms used by companies such as Meta make sure you are sent back to the gore that may have flashed up on your feed.

That’s the view of Curtin University Professor of Internet Studies Tama Leaver, who says social media algorithms are making it difficult for children to escape dark holes on the internet filled with violent content.

Sign up to The Nightly's newsletters.

Get the first look at the digital newspaper, curated daily stories and breaking headlines delivered to your inbox.

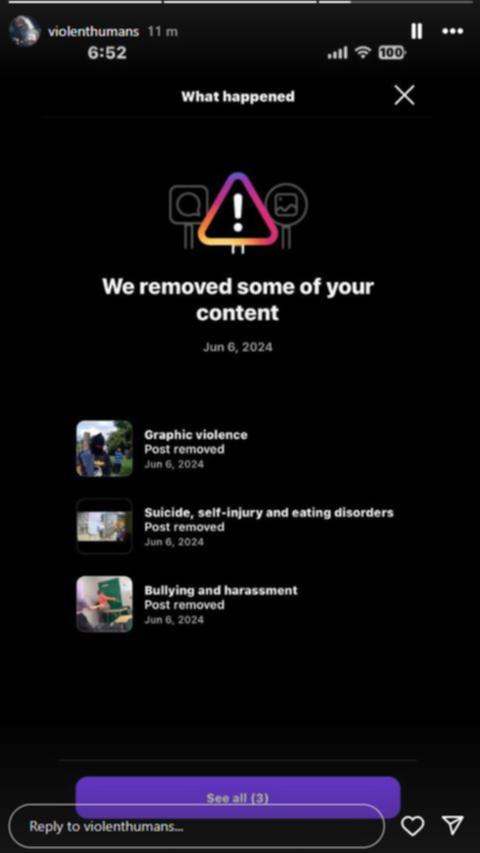

It comes after The Nightly spoke to a 20-year-old man who revealed how he gamed Instagram’s algorithm to push out violent videos that breached the platform’s community guidelines.

The American man said he felt no remorse if kids came across the graphic material — which included images of murder and assaults.

One account, which has been taken down, had reached an audience of more than 260,000 Australians in just 30 days. At the time of publication, he had at least four accounts — one of which had 116,000 followers.

Mr Leaver told The Nightly that poor auto-detection and algorithms’ inability to effectively differentiate real and fake violence were keeping harmful content on platforms, including Meta’s Instagram.

“The content that you can access as a 13-year-old is the same content you can get access to as an adult — so there is some inherent risk in that,” he said.

“If you do have a young person who ends up being curious about something to do with violence, guns or crime (just) once, then the algorithm is probably pleased to return them to that content the next time they turn up — even if it’s not what they’re looking for.

“There is some danger in sort of skewing what you end up watching quite significantly and it takes some time to escape (the content).

“These dark holes that you might be heading down are (driven) by amplification and algorithms . . . they mean that you see the same sort of content more than you would in other more balanced media formats.”

Mr Leaver said he believed X, formerly Twitter, housed the “most harmful” content since billionaire Elon Musk took over and removed safeguards to create a platform that fosters free speech.

“Meta and the Instagram products tend to be the ones that sound like they’re going to be the most reliable and the most well-policed, but we also know they play hard and fast with their own rules sometimes,” he said.

“Sometimes they need an explicit trigger to apply their own rules . . . the sale of vaping products is explicitly not allowable and yet, it doesn’t take long to find that sort of content, for example.”

The young American man’s practice of attracting followers to a public account before turning it private was a common way of creating online communities, Mr Leaver said.

A confronting special investigation series by The West has exposed how footage of murders, mutilation and torture is slipping unprompted into feeds. Experts have revealed how children are potentially being “normalised” to such content, which could lead to major psychological trauma.

Australian Psychological Society President Dr Catriona Davis-McCabe said while the amount of time kids spent online was already worrying, the nature of the content they were exposed to was even more concerning.

“This is a massive risk for young people because they are now able to view things online that their brains are not developed enough to be witnessing, and it can be incredibly traumatic for them,” Dr Davis-McCabe said.

For Mr Leaver, harmful online communities — not just related to violent content — were capturing the attention of the younger generation.

“Once a community reaches a certain number, usually a number that can be profitable, it moves into a closed space where the community sort of reinforces itself for whatever reason,” he said.

“It’s everything from delusions of healthy influences all the way through to other communities of harm.

“(And) once they are closed, then obviously that mechanism of reporting means that it’s unlikely people are going to be reporting that content because they’ve explicitly chosen to be there.”

Instagram’s policies state human moderators are alerted to potentially harmful content when the platform’s algorithm has difficulty deciphering the content’s impact.

But with the large number of posts being pumped online every day, and considering the politicisation of confronting material, shielding people from potential harm has become difficult.

“Meta has to rely on its algorithmic systems to flag obvious examples — so if you were posting obvious nudity, for example, then the system would probably pick that up.

“But the grey areas are vast, and I think that’s the hard stuff — where, if nobody’s flagging it, it’s harder to think that the system is going to ultimately find that, especially if the violence is contextual, or the content is contextual in the way that it’s harming people.

“Things that are extremely racist in one context could be harmless in another. And it’s the speed in which things get seen before people flag it that is often the challenge.”

Instagram’s stories, main feed and reels feature, which was created after the success of TikTok’s For You Page, were all driving the growth of potentially harmful online communities, Mr Leaver said.

“Instagram is a platform where things like activism or things like grabbing attention are easiest to do because it’s so visual,” he said. “It’s also the case that Instagram’s probably the most diversified.

“There are a lot of types of content forms in there, but it also means there are enormous communities in there for almost pretty much every imaginable content type, except (usually) explicitly adult content.

“While there is a lot of algorithmic stuff in the background, users flagging content for review is probably (the) most important because visuals are harder to unpack and understand than just primarily textual content.”