SXSW Sydney: Can AI replace a human judge?

Humans are laden with conscious and unconscious biases. Could artificial intelligence deliver a fairer justice system?

A lawyer, a judge and an ethicist walk into a bar. OK, not a bar, but a bland conference room at Sydney’s convention centre.

It’s not a joke, although the upcoming Chris Pratt movie Mercy might be. The trio and the Pratt flick are both looking at the same modern conundrum – can AI be relied on to be judge, jury and executioner?

In the Hollywood version to be released in January, Pratt plays an LA detective who is accused of murdering his wife. He has 90 minutes to prove his innocence, not to 12 humans who couldn’t get out of jury duty, but to an AI judge, who will determine his guilt based on inputs including his testimony.

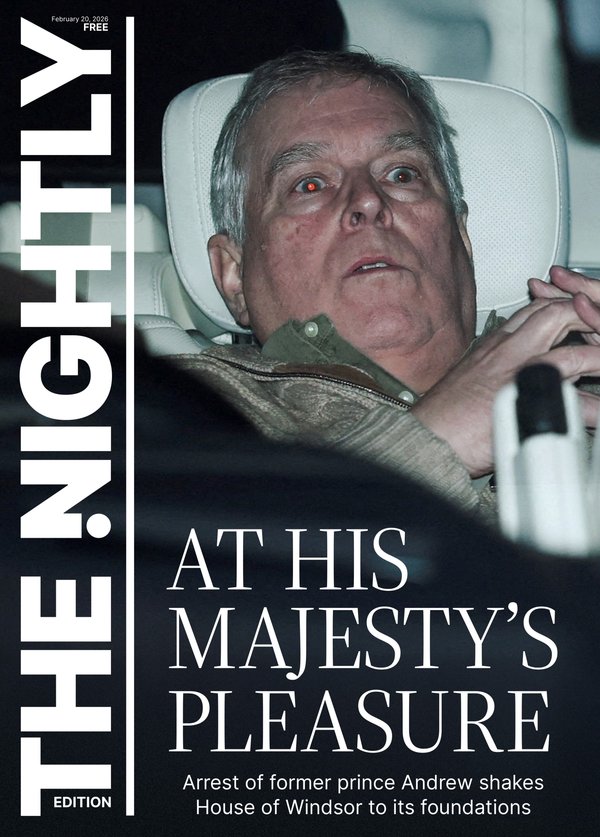

If the likelihood of his guilt, as determined by an AI that looks like Rebecca Ferguson, does not fall below the 92 per cent threshold, he will be executed. No appeals.

Sounds dystopian and very Hollywood. But the reason that story exists – or will – is that there is genuine apprehension and fear in the community about the role AI will play in our future across a range of areas, including in the law.

When there’s something as precious as human liberty and freedom at stake, it tends to evoke a lot of emotions.

So, a lawyer, a judge and an ethicist debated this scenario today at SXSW Sydney at a session called AI as judge, jury and executioner: the ethics of automated sentencing.

The panel comprised Judge Ellen Skinner of the NSW District Court, who is also President of the Children’s Court and was speaking only for herself and not the wider judiciary; Lander & Rogers partner and technology lawyer Matt McMillan; and ethicist Peter Collins.

“The justice system tends to enforce a moral community and moral communities have people inside them and outside them, and laws set up by the people who are outside the moral community,” Judge Skinner said. “When people come up before us, they, to some extent, want something like absolution in, ‘I see you, you belong to our community’.”

“Where AI could be used to supplement the work of the existing system,” Judge Skinner contended, “is how it could improve the quality of the decisions by receiving better information that is evidence-based about what risk predictions might look like.”

She said this could be particularly useful in areas such as bail and sentencing.

But the watchword when it comes to AI and justice is “bias”.

Judge Skinner expanded on this point: “We would need to partner quite carefully. The judiciary would need to be transparent, that we would need to make sure that we’re inputting into an AI model is a set of facts that are reliable, credible and then we use the AI model to extrapolate from those facts information that then we could rely on to assist us in decision-making.”

While there are efficiencies to be gained, Judge Skinner emphasised that “there is still something very significant about human connection”, especially if the decisions made were to have any legitimacy.

Collins, the ethicist, pointed out that humans, including judges and juries, are laden with their own biases, whereas AI can actually be “more ethical” than human beings.

There are also positive biases, or positive discrimination, that can be built into AI decision-making systems to reflect shifting community attitudes in areas of the law such as domestic violence.

“So, there are pros and cons to the bias that may be what the community wants, that our humanity comes from our biases,” Judge Skinner added.

McMillan pointed out that biases can be a double-edged sword. “AI absolutely has the power to remove that unconscious bias, be more evidence-based, but if you have algorithms which are trained on historical data, which have had system inequality, then you have that issue of that bias being replicated or amplified.”

McMillan said we had to be conscious of what the inputs into the AI system were, what the guardrails were, what the outputs were, and what the confidence levels around those outputs were.

He said, “Machines aren’t there to replace judges. I tend to look at it for judges a bit like the GPS system. It can provide you with helpful directions but the driver remains responsible.

“I see AI in the future as being a little bit like an intelligent sparring partner, which challenges, which can help surface ideas, which can help identify outliers, but ultimately it’s the human, that’s the decision maker.”